What is the robots.txt file used for?

Sometimes you might want to stop search engines and/or crawlers from accessing or indexing your website. You can use a robots.txt file to stop this from happening.How to create a robots.txt file

Creating a robots.txt file is easy, here are the basic steps:- Login to cPanel.

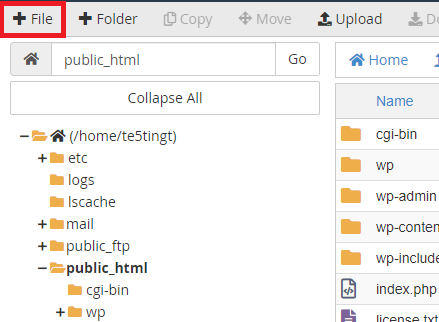

- Click on File Manager under Files.

- Navigate to the folder your website is stored in (this is normally the public_html folder).

- Click on the +File button to the top left of the page.

- In the New File Name field, set the filename as robots.txt.

- Click Create New File.

Basic Usage

Here are some examples of code/rules you can add to your robots.txt file, to achieve different things. Blocking Google from indexing anything under /blog (e.g., https://my-domain-name.com/blog) on your website:User-agent: Googlebot Disallow: /blog/ Allow all bots to access any part of your website: User-agent: * Allow: / Let the bot know where your sitemap is: Sitemap: https://example.com/sitemap.xml